How to Pinpoint What Drives Early-Stage Delinquency Roll Rate


In the subprime lending and credit card industry, risk isn’t just a metric; it’s a dynamic, fast-moving current. You monitor portfolio health daily, but some of the most critical signals aren’t in the high-level dashboards. They’re hidden in the subtle shifts—the moments when a small problem begins to snowball into a significant loss.

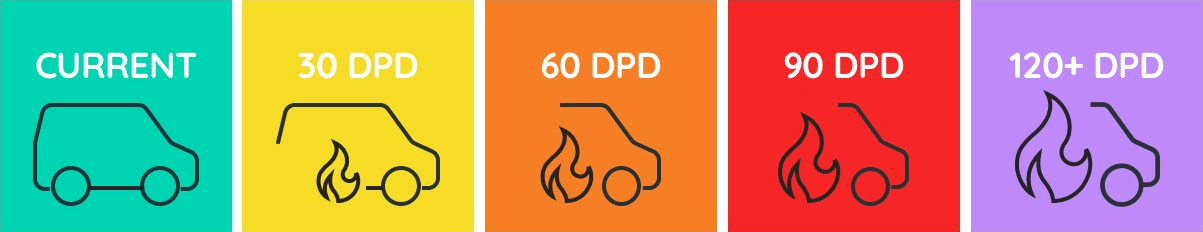

One of the most dangerous of these is the early-stage delinquency roll rate. This is the percentage of your borrowers who “roll” from one delinquency bucket to the next in a given month, for instance, from 30 days past due (DPD) to 60 DPD. While a 90-day delinquency is a clear signal of potential losses, the battle is often won or lost in those first 60 days. A rising roll rate is a leading indicator of future charge-offs, increased provisioning costs, and stress on your collections resources.
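To make the definition concrete, here is a minimal sketch of a 30-to-60 DPD roll rate computed from two monthly snapshots. The function name `roll_rate`, the bucket labels, and the five toy accounts are assumptions made for illustration; they are not taken from any particular loan management system.

```python
from typing import Dict

def roll_rate(prev: Dict[str, str], curr: Dict[str, str],
              from_bucket: str = "30", to_bucket: str = "60") -> float:
    """Share of accounts in `from_bucket` last month that are in `to_bucket` this month."""
    # Accounts that started the month in the source bucket.
    start = [acct for acct, bucket in prev.items() if bucket == from_bucket]
    if not start:
        return 0.0
    # Of those, count the ones now sitting in the next bucket.
    rolled = sum(1 for acct in start if curr.get(acct) == to_bucket)
    return rolled / len(start)

# Toy snapshots (account id -> delinquency bucket); purely illustrative.
last_month = {"A1": "30", "A2": "30", "A3": "30", "A4": "30", "A5": "current"}
this_month = {"A1": "60", "A2": "current", "A3": "30", "A4": "current", "A5": "30"}

rate = roll_rate(last_month, this_month)
print(f"30-to-60 DPD roll rate: {rate:.0%}")
```

This version counts accounts. A balance-weighted variant would sum outstanding balances instead of counting accounts, which is closer to the dollar figures used later in this article.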

For a subprime lender with a lean team, the question is stark: You see higher roll rates. You know the “what.” But do you know the “why”? And can you find a solution quickly enough? Pinpointing the precise drivers and factors behind these rising or falling roll rates in a portfolio of thousands of loans is a monumental task. The answer isn’t buried in a single column of data; it’s woven into the complex interactions between dozens of variables across your entire business.

This article examines the crucial importance of delinquency roll rate analysis, the prohibitive costs and limitations of traditional tools for this task, and how a new approach—Statistical AI—can enable lenders to stay ahead of the delinquency curve.

Let’s be clear: monitoring roll rates in early delinquency stages isn’t an academic exercise. It has a direct and immediate impact on your bottom line. According to data from the Federal Reserve Bank of New York, as of early 2024, an increasing share of auto loan debt has been transitioning into delinquency, with rates for lower-income borrowers surpassing their pre-pandemic peaks. This macro trend puts immense pressure on individual lenders to understand their micro-trends.

Imagine your lending portfolio has 10,000 active accounts in the 30 DPD bucket this month. A typical 30- to 60-day DPD roll rate might be 25%. That means you can expect 2,500 of those accounts to become 60-day delinquent accounts next month.

Now, what if that roll rate creeps up to 30%?

That’s an additional 500 accounts rolling into a more severe stage of delinquency. If the average balance is $15,000, that’s an extra $7.5 million in portfolio value that has just moved significantly closer to becoming a charge-off. This isn’t just a number on a report; it triggers a cascade of consequences:

The core challenge is that the cause is rarely simple. It’s not just “FICO scores below 620.” It’s a combination of factors. Perhaps they are loans originated through a specific channel in a certain geography, combined with a particular payment method, and a recent lull in collections outreach. Identifying these multi-dimensional drivers is where traditional roll rate analysis tools often struggle.

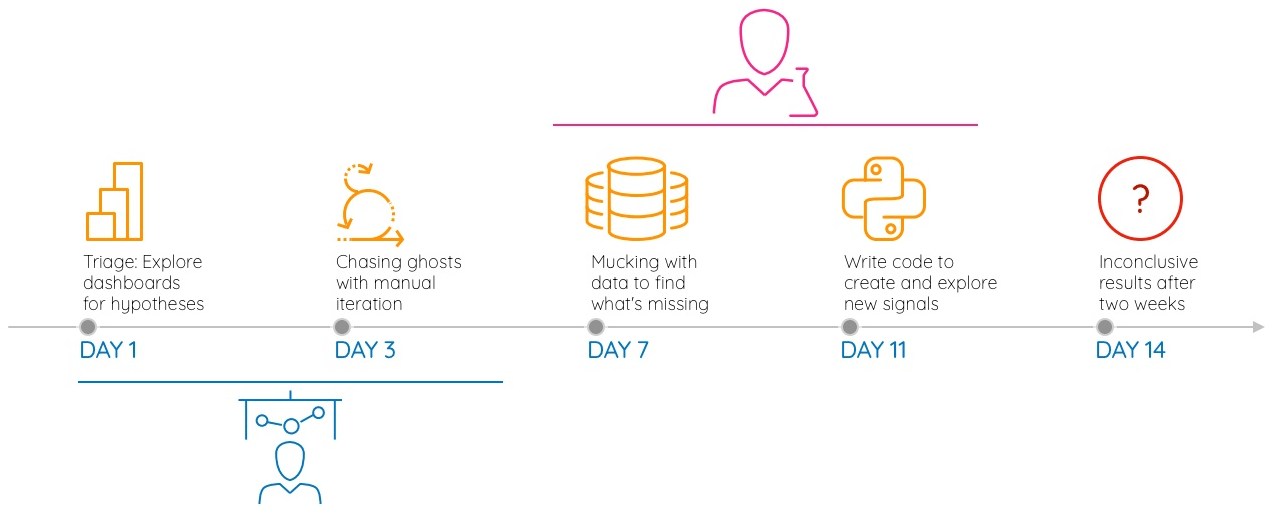

When a critical KPI, such as the 30-to-60 DPD roll rate, suddenly jumps, the clock starts ticking. Every day that passes without a clear answer is a day when the problem worsens and becomes more expensive. Let’s create a realistic example to illustrate the challenges and quantify the costs.

The Scenario:

You are the Chief Risk Officer at a subprime auto lender with a $1 billion portfolio. Your 30 DPD bucket typically holds around $80 million in active loans. Your analytics capabilities are lean but competent: you have one sharp BI Analyst who is a wizard with Tableau, and one Data Analyst who handles more complex SQL queries and reporting.

This month, the 30-to-60 DPD roll rate jumps from its historical average of 22% to 25%. This 3-point increase means an additional $2.4 million ($80M * 3%) has just rolled into a more severe delinquency stage than expected. You need answers, fast. Here is what would unfold using traditional methods.
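The arithmetic behind that $2.4 million figure is worth spelling out before walking through the investigation. The sketch below uses a hypothetical helper, `incremental_roll`, which simply multiplies the bucket size by the change in roll rate; the inputs are the scenario figures stated above.

```python
def incremental_roll(bucket_size: float, baseline_rate: float, observed_rate: float) -> float:
    """Extra volume rolling forward versus what the baseline rate would predict."""
    return bucket_size * (observed_rate - baseline_rate)

# Scenario figures from the text: $80M in the 30 DPD bucket, 22% baseline, 25% observed.
extra_dollars = incremental_roll(80_000_000, 0.22, 0.25)
print(f"Extra balance rolled to 60 DPD: ${extra_dollars:,.0f}")

# Earlier example: 10,000 accounts, 25% rising to 30%, $15,000 average balance.
extra_accounts = round(incremental_roll(10_000, 0.25, 0.30))
print(f"Extra accounts: {extra_accounts}, exposure: ${extra_accounts * 15_000:,}")
```

The same helper works for account counts or dollar balances, which is why both examples from this article fit it.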

The first call goes to your BI Analyst. Their mission is to use the company’s interactive dashboards to find the source of the bleeding.

The Process:

Quantifying the Cost of Phase 1:

Unsatisfied with the BI findings, you escalate the request to your Data Analyst, the only person who can write code to query the production databases directly.

The Process:

Quantifying the Cost of Phase 2:

Throughout this process, you’ve also checked your Loan Management System’s (LMS) built-in analytics module. It provided the initial alert but offers no path forward. Its canned reports on vintage performance and risk grades confirmed the problem, but couldn’t explain it. The LMS cannot ingest and analyze data from alternative vendors or other non-standard sources. This isn’t a cost of time, but a cost of strategic paralysis. You are locked into your vendor’s predefined view of risk, unable to adapt or investigate emerging threats that fall outside their standard reports.

To get ahead of the delinquency snowball, you need to be both deep and fast. This requires a new approach that automates the discovery process, empowering business experts to find answers without writing a single line of code. This is the power of Statistical AI.

At dotData, we’ve engineered our dotData Insight platform around this concept. Statistical AI applies machine learning not for prediction, but for discovery. It acts as an automated data analyst, sifting through all your raw data to find the hidden signals—the “business drivers”—that have the most significant impact on your KPIs.
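To give a feel for what "discovering drivers" means in practice, here is a deliberately naive sketch: it scans every attribute value, computes the roll rate inside that segment, and ranks segments by their lift over the portfolio baseline. This is not dotData's algorithm or API. The function `rank_single_drivers`, the field names, and the eight toy accounts are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import List, Tuple

def rank_single_drivers(accounts: List[dict], attributes: List[str],
                        min_count: int = 2) -> List[Tuple[str, str, float, int]]:
    """Rank (attribute, value) segments by roll rate lift over the portfolio baseline."""
    baseline = sum(a["rolled"] for a in accounts) / len(accounts)
    results = []
    for attr in attributes:
        counts = defaultdict(lambda: [0, 0])  # value -> [rolled, total]
        for a in accounts:
            counts[a[attr]][0] += a["rolled"]
            counts[a[attr]][1] += 1
        for value, (rolled, total) in counts.items():
            if total >= min_count:  # skip segments too small to judge
                results.append((attr, value, rolled / total - baseline, total))
    # Highest lift first.
    return sorted(results, key=lambda r: r[2], reverse=True)

# Toy 30 DPD accounts; "rolled" is 1 if the account moved to 60 DPD. Illustrative only.
accounts = [
    {"state": "TX", "payment_method": "manual_ach", "rolled": 1},
    {"state": "TX", "payment_method": "manual_ach", "rolled": 1},
    {"state": "TX", "payment_method": "autopay", "rolled": 0},
    {"state": "OH", "payment_method": "autopay", "rolled": 0},
    {"state": "OH", "payment_method": "manual_ach", "rolled": 1},
    {"state": "OH", "payment_method": "autopay", "rolled": 0},
    {"state": "FL", "payment_method": "autopay", "rolled": 0},
    {"state": "FL", "payment_method": "autopay", "rolled": 0},
]

ranked = rank_single_drivers(accounts, ["state", "payment_method"])
for attr, value, lift, n in ranked[:3]:
    print(f"{attr} = {value}: lift {lift:+.1%} over baseline (n={n})")
```

A scan like this only looks at one attribute at a time and at columns that already exist. The harder part, which the article attributes to Statistical AI, is deriving candidate features from raw data across many tables and testing them at scale.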

Imagine you want to understand why your 30-to-60 DPD roll rate has increased. Here’s how the process changes with Statistical AI:

Suddenly, the “why” becomes crystal clear. You might discover drivers like:

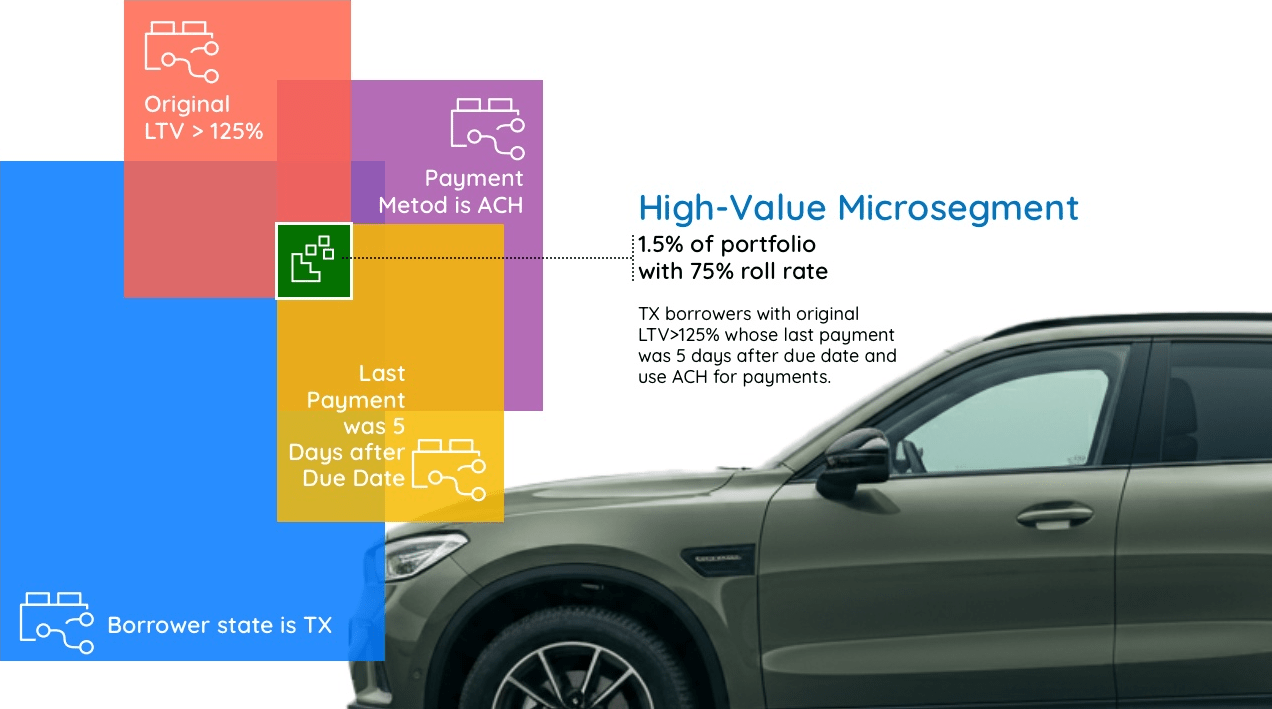

Discovering these individual drivers or factors is the first step. The real value comes when a business user—a risk manager or collections director with deep domain knowledge—can interactively combine them to build powerful micro-segments without writing any code.

This is where you move from analysis to action. Using dotData Insight, the manager can start by selecting the most potent single driver from the list: Borrower’s State is Texas. The system instantly shows this segment has a 42% roll rate.

This isn’t deep enough. So, they add (or “stack”) a second driver on top of it: Original LTV is > 125%.

The platform recalculates in real time. This new, combined segment of Texas borrowers with an LTV over 125% is smaller, but its roll rate has jumped to 55%. They are getting closer to the core of the problem.

Let’s add a third driver: Payment Method is Manual ACH.

Again, the system instantly recalculates. This hyper-targeted micro-segment may only represent 1.5% of your 30-day DPD portfolio, but its roll rate is a staggering 75%.
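The stacking logic itself is easy to sketch outside any platform. The example below treats each driver as a simple predicate and recomputes the roll rate and portfolio share as drivers are added. The helper `segment_roll_rate`, the field names, and the ten toy accounts are hypothetical; the numbers it prints come from the toy data and are not meant to reproduce the 42%, 55%, and 75% figures above.

```python
from typing import Callable, List, Tuple

Driver = Callable[[dict], bool]

def segment_roll_rate(accounts: List[dict], drivers: List[Driver]) -> Tuple[float, float]:
    """Return (roll rate, share of the bucket) for accounts matching every driver."""
    segment = [a for a in accounts if all(d(a) for d in drivers)]
    share = len(segment) / len(accounts)
    rate = sum(a["rolled"] for a in segment) / len(segment) if segment else 0.0
    return rate, share

# Three drivers from the walkthrough, expressed as predicates.
is_texas: Driver = lambda a: a["state"] == "TX"
high_ltv: Driver = lambda a: a["ltv"] > 1.25
manual_ach: Driver = lambda a: a["payment_method"] == "manual_ach"

# Toy 30 DPD accounts; "rolled" is 1 if the account moved to 60 DPD. Illustrative only.
accounts = [
    {"state": "TX", "ltv": 1.30, "payment_method": "manual_ach", "rolled": 1},
    {"state": "TX", "ltv": 1.35, "payment_method": "manual_ach", "rolled": 1},
    {"state": "TX", "ltv": 1.40, "payment_method": "autopay", "rolled": 1},
    {"state": "TX", "ltv": 1.30, "payment_method": "autopay", "rolled": 0},
    {"state": "TX", "ltv": 1.10, "payment_method": "manual_ach", "rolled": 0},
    {"state": "TX", "ltv": 0.95, "payment_method": "autopay", "rolled": 0},
    {"state": "OH", "ltv": 1.30, "payment_method": "manual_ach", "rolled": 0},
    {"state": "OH", "ltv": 1.00, "payment_method": "autopay", "rolled": 0},
    {"state": "FL", "ltv": 1.10, "payment_method": "autopay", "rolled": 1},
    {"state": "FL", "ltv": 0.90, "payment_method": "autopay", "rolled": 0},
]

stack: List[Driver] = []
results = []
for name, driver in [("Texas", is_texas), ("LTV > 125%", high_ltv), ("Manual ACH", manual_ach)]:
    stack.append(driver)  # add the next driver on top of the previous ones
    rate, share = segment_roll_rate(accounts, stack)
    results.append((rate, share))
    print(f"+ {name}: roll rate {rate:.0%}, {share:.0%} of the bucket")
```

Each added driver shrinks the segment, so it is worth watching the segment size alongside the roll rate: a very high rate on a handful of accounts can be noise rather than signal.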

This is the actionable insight that was impossible to find with traditional methods. It’s no longer a vague problem; it’s a specific, identifiable group of borrowers who need a completely different collections strategy, and they need it now. This group warrants:

This is how lean, agile lenders can outperform the competition. While others are spending weeks trying to diagnose a problem, you can identify, isolate, and act on your highest-risk segments in a matter of hours.

The subprime lending environment will only become more complex, especially during economic downturns. Relying on an analytics playbook from the last decade is no longer a viable strategy for managing risk in the modern world. The actual cost of traditional methods isn’t just the salary of your analysts; it’s the millions in preventable losses that accumulate while you wait for answers.

Let’s revisit the math. The traditional, three-week investigation cost nearly $10,000 in direct personnel costs. If a similar deep dive is needed every month, you’re spending about $120,000 a year just to keep your head above water on this single issue, while your most valuable analysts are trapped in a cycle of reactive fire-fighting.

The real damage is in the delayed action. During those three weeks, the additional $2.4 million in high-risk loans went without effective treatment. A targeted, timely collections strategy could reduce the ultimate charge-off rate on this toxic segment by 20 percentage points. The cost of your delay, the failure to act, is a likely $480,000 ($2.4M * 20%) in preventable losses from a single monthly cohort.
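Both cost figures can be reproduced in a few lines. This is a back-of-the-envelope sketch using only the numbers quoted in this article; the helper name `preventable_loss` is hypothetical, and the $10,000 per investigation is the approximate figure cited above.

```python
def preventable_loss(cohort_balance: float, reduction_points: float) -> float:
    """Loss avoided if the charge-off rate drops by `reduction_points` percentage points."""
    return cohort_balance * reduction_points / 100

# Direct personnel cost: roughly $10,000 per investigation, repeated monthly.
annual_investigation_cost = 10_000 * 12

# Delay cost: $2.4M cohort, charge-off rate reduced by 20 percentage points.
loss = preventable_loss(2_400_000, 20)

print(f"Annual investigation cost: about ${annual_investigation_cost:,}")
print(f"Preventable loss per monthly cohort: ${loss:,.0f}")
```

The comparison makes the article's point visible: one month's preventable loss is several times the annual personnel cost of the investigations.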

Now compare that to the dotData approach: an AI-powered discovery platform can deliver the key drivers and micro-segments in under a day. Your team can move immediately to design and implement a targeted strategy, engaging that $2.4 million cohort while there is still time to influence outcomes.

Even with an annual subscription fee, the ROI is overwhelming. Preventing that single $480,000 loss from one month’s analysis more than pays for the platform for the entire year. More importantly, it frees your expert team from the drudgery of manual data work, allowing them to focus on what they do best: building strategies that protect the portfolio and drive the business forward.

The choice is clear. You can continue to pay the high price of being slow, or you can invest in the speed and depth needed to win. Stop reacting to the delinquency snowball. Start anticipating it by deploying your resources with precision that protects your portfolio and secures your bottom line.

Ready to see the hidden drivers impacting your roll rates? Learn how dotData Insight can provide the clarity you need to act decisively.