GenAI Security: Striking the Right Balance Between Innovation and Protection

- Technical Posts

Generative AI (GenAI), particularly large language models (LLMs), are reshaping how we work and transforming industries. From enhancing productivity in day-to-day operations to powering transformative customer-facing features, the momentum toward adopting AI-driven technologies is strong—and growing stronger.

But a great opportunity comes with great responsibility. Thanks to GenAI, productivity has improved, but it also comes with new security challenges that traditional software systems were never designed to handle. At dotData, where we build enterprise-grade AI solutions, we constantly face the challenge of balancing innovation (“offense”) with robust governance and protection (“defense”).

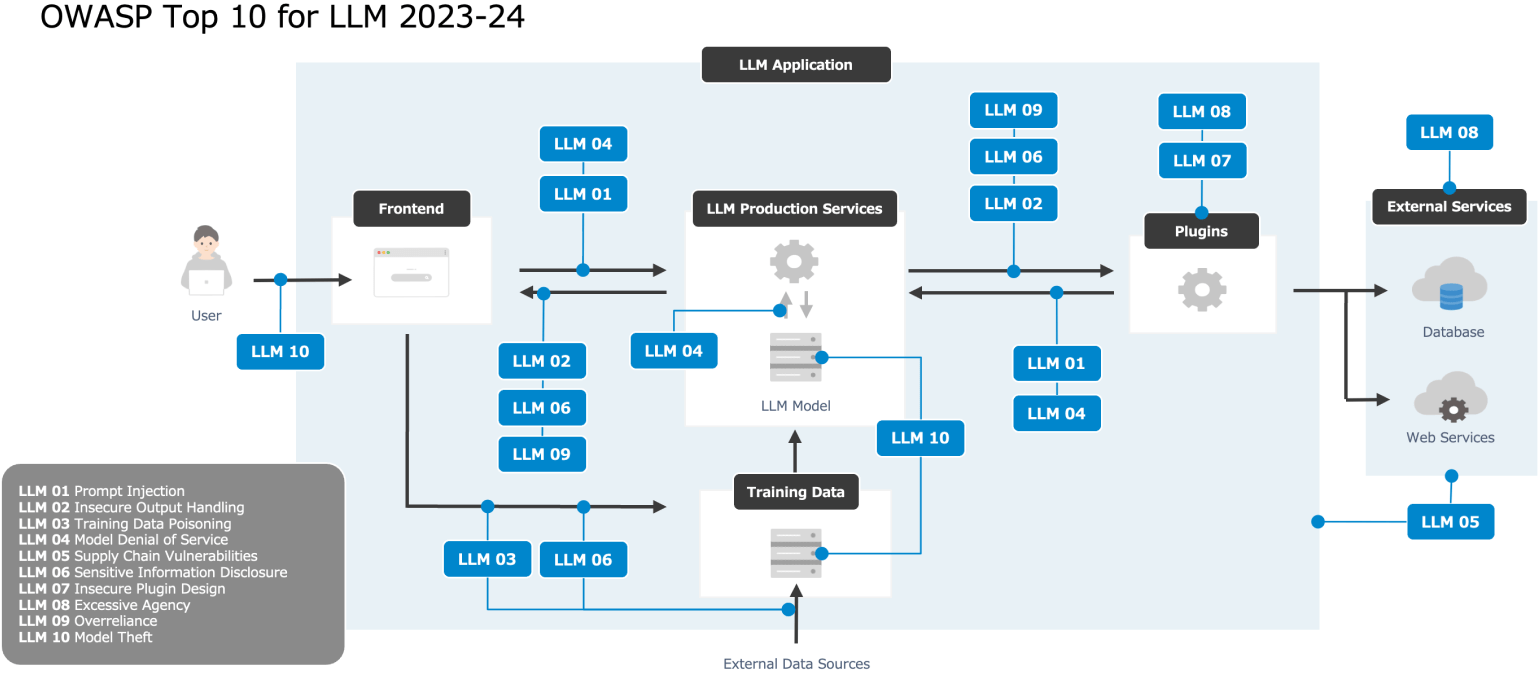

In this post, we’ll explore why LLM security is uniquely difficult, what organizations can learn from the OWASP Top 10 for LLM Applications, and how dotData is tackling these security challenges across both product and organizational levels.

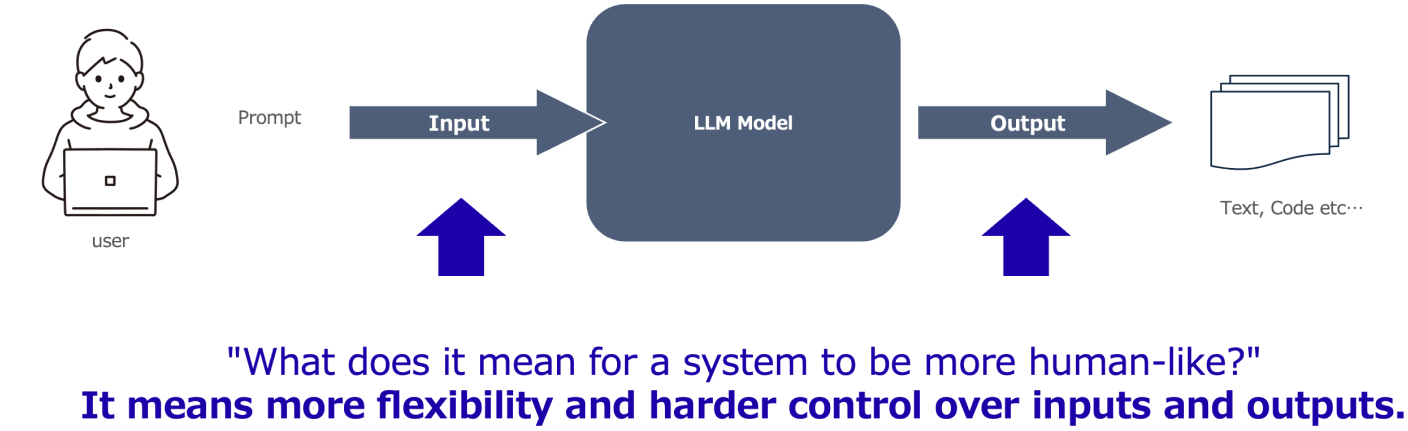

Traditional software systems operate under predictable rules: for the same input, you get the same output. Determinism allows for well-defined and testable security models. If unexpected behavior emerges, it’s typically considered a bug.

LLMs, by contrast, behave more like humans. They generate different outputs even with the same input, influenced by factors like AI model architecture, context, temperature settings, or even prompt history. This non-deterministic nature makes input/output control far more difficult — and poses potential threats such as prompt injection, output leakage, and model exploitation. Without proper security posture, it can result in critical vulnerabilities and serious consequences.

Despite these differences, many GenAI security best practices remain relevant:

However, what’s new is the scale and unpredictability of risk. That’s where modern AI security frameworks like OWASP’s Top 10 for LLM and Generative AI Applications come into play.

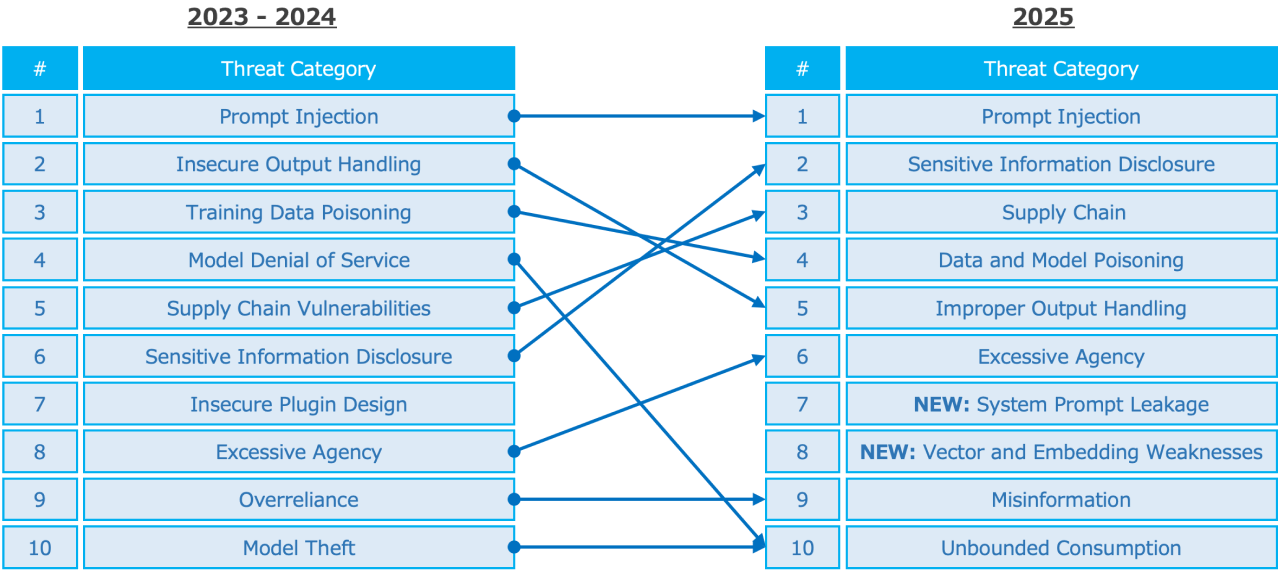

The OWASP Top 10 for LLM and Generative AI Applications (updated in late 2024 for 2025) provides a practical, evolving framework for risk identification and cybersecurity threat mitigation unique to generative AI systems.

Notably, the 2025 edition introduces two new threats:

Unlike user prompts, system prompts define model behavior under the hood. If these contain credentials or sensitive data, the risk posed by these data leakages is severe. Clear separation and redaction are essential for data protection.

Particularly relevant for Retrieval-Augmented Generation (RAG), this includes risks like vector database poisoning or embedding manipulation that may allow adversaries to “trick” the model into revealing unintended content.

In addition to these new entries, terminology updates better reflect real-world issues. For example, “Insecure Output Handling” has been refined to “Improper Output Handling,” acknowledging the complexity of context-aware generative behavior.

These changes reflect how quickly real-world implementation of LLMs is maturing and why continuous monitoring and risk reassessment in security operations is necessary for threat detection and reinforcement of Generative AI security.

The past year has seen rapid LLM innovation: multi-modal models (text, image, code), lighter and faster models optimized for production, and expanding open-source ecosystems (e.g., LLaMA, DeepSeek).

Yet this rapid progress comes with emerging threats. Prompt injection vulnerabilities have been observed in tools like Slack AI and Microsoft Copilot. Public security concerns around data leakage, such as early reports involving DeepSeek, underscore the reputational risks tied to AI tool usage.

On the regulatory front:

This landscape creates complexity in security controls for enterprises managing global deployments.

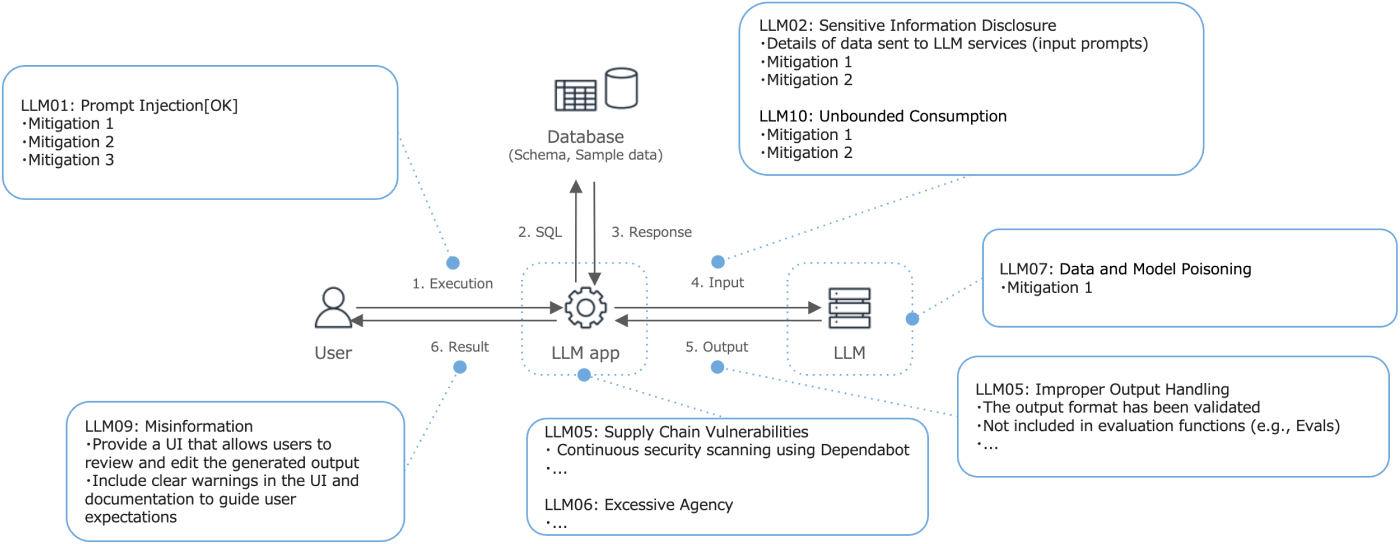

At dotData, we serve data-driven enterprises. Trust is non-negotiable, so security is embedded into our product development lifecycle across technical, organizational, and governance dimensions.

To accommodate varying customer needs, we offer:

These GenAI security measures allow organizations across industries—including finance and government—to adopt generative AI on their own terms.

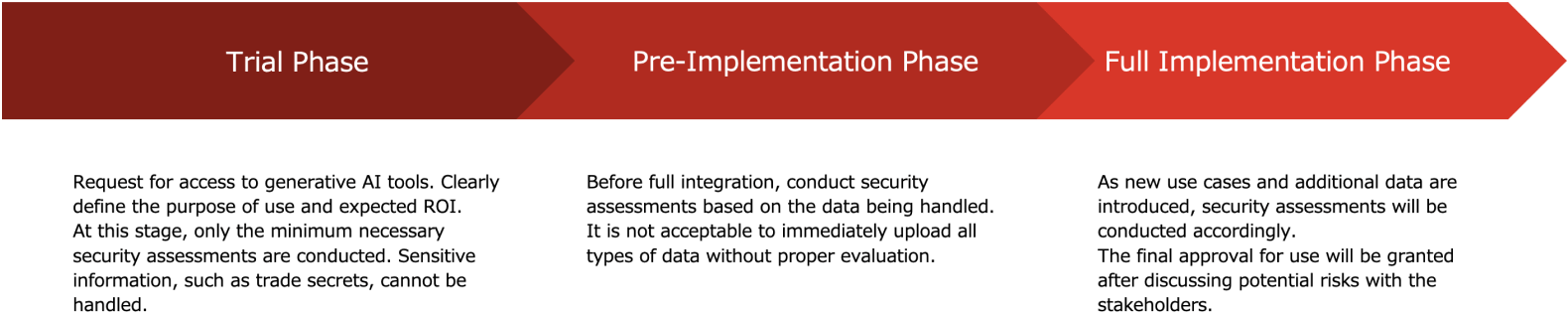

One of the biggest organizational dilemmas is the balance between AI innovation speed and security assessment processes. Many large enterprises have robust security assessments for software onboarding — often taking weeks or months. This can hinder innovation.

A process that is too flexible increases risks. But if too rigid, it can limit progress. The answer lies in graduated risk management:

This structured, risk-based approach allows organizations to test and deploy new GenAI capabilities without waiting for a “perfect” security framework.

GenAI brings exciting opportunities for automation, decision support, and service innovation. But as GenAI capabilities grow, so do risks.

Emerging technologies like Model Context Protocol (MCP)—which allow LLMs to interact with external tools—will require new trust and evaluation frameworks. Much like open-source libraries, plugins, and tools that interface with LLMs need scrutiny. But evaluating trust in an AI that can hallucinate adds a new layer of complexity.

Ultimately, zero AI risk is impossible. Organizations must develop an overall security posture of informed risk acceptance, grounded in robust AI governance, but still flexible enough to allow AI development and transformation.

At dotData, we believe that the future of AI lies not in choosing between “move fast” and “stay safe,” but in mastering both intelligently and responsibly.