How Automation Solves the Biggest Pain Points in Data Science

- Thought Leadership

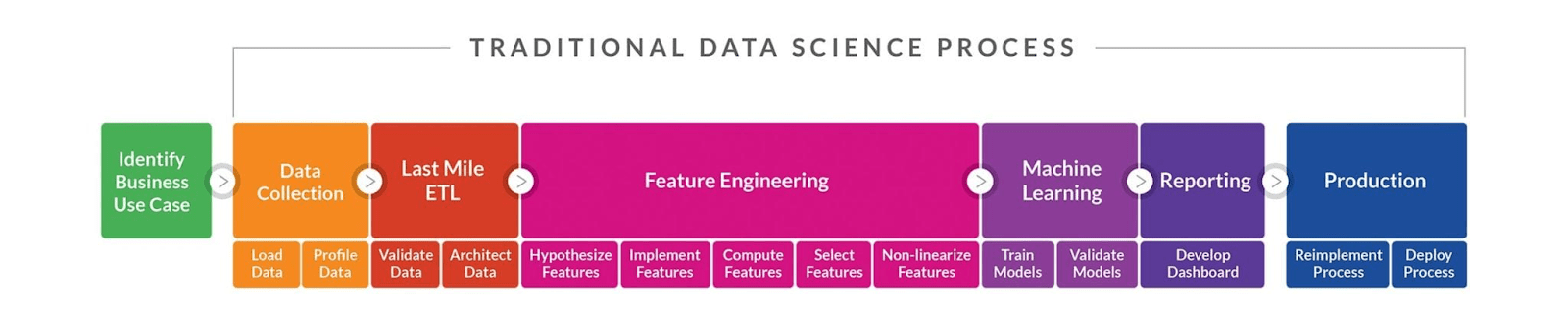

While most of the attention in the world of AI and Machine Learning is on the algorithms themselves, most data scientists often worry not about the outcome, but instead on the steps involved in arriving at that outcome. The reason for this is simple: building AI and ML models is tedious, complicated, requires a multitude of subject matter experts, and is a highly manual process. In our blogs, we have often highlighted the multiple steps necessary to build useful AI and ML models through data science. Today’s article focuses on what data science teams can do to accelerate the building of models, while still achieving the goal of building valuable AI/ML models. As a refresher, below is an illustration of the complexity and multi-step nature of the data science process. To understand the benefits of automation in data science, we first have to know where the most manual work is involved, and how automation could benefit.

At the heart of all AI/ML models is data – lots of data. In most cases, the data necessary to build AI/ML models live in disparate systems, often made by different vendors, each with its own set of data tables, structures, and fields of data. Before you even get to your AI/Ml model, you most likely started by building a data warehouse to collect, consolidate, and normalize all those systems into one unified repository – problem solved? Not quite.

The problem with most data warehouse deployments is that IT departments do not build them with a single purpose in mind. It’s rare to build a data warehouse just to build AI/ML models; instead, it typically supports a host of initiatives from ad-hoc reporting to business intelligence to AI/ML development. That’s where the problem for AI/ML begins. Because data warehouses need to support a broad range of use-cases, much of the data prep and data cleansing needed for AI/ML use-cases is missing. Connecting to your data warehouse and prepping and cleaning your data with AI/ML development in mind is the first step in the manual data science process. Automation can provide significant time savings by identifying and correcting gaps in data and problems that may skew models.

Although data prep and cleansing are significant hurdles in the AI/ML development lifecycle, they pale in comparison to the effort needed to build feature tables. For data science, the challenge is that even in a data warehouse, information is available in relational tables that combine data in modular ways to allow for a multitude of use-cases and reporting scenarios. The data science workflow, on the other hand, requires a purpose-built flat table with all relevant columns of data needed to build the AI/ML models. Building this flat table is the most critical – and most time-consuming – step in the data science process.

Beyond the technical complexities of building SQL code that efficiently combines multiple columns from multiple tables, there is also the challenge of the usefulness of the columns retrieved. For example, when developing a customer churn model, having a column of data that quantifies how much revenue a customer is worth would be beneficial, but having a column that identifies a client’s main phone number, on the other hand, might not be necessary. This type of hypothesis, test, validation, and repeat process is a critical part of building a useful feature table and requires much time and the involvement of subject matter experts that are familiar with the data and can provide guidance as to which columns might give the most benefit.

Automating the feature engineering process is the ultimate goal of any data science automation platform. Early attempts at this were limited to using already built “features” to derive new ones. For example, a feature table with total revenue and number of orders might help create “average order value” through a simple mathematical equation. This type of feature creation is valuable but ultimately does not save any time in developing the original feature table necessary. However, recent advances in AutoML platforms have allowed the creation of new platforms that can leverage

AI to build feature tables “on the fly.” This new type of “AutoML 2.0” products connect directly to your data warehouse and leverage AI to dynamically develop and evaluate millions of possible feature table combinations. Even with this approach, however, it’s essential also to have a second, critical step: feature evaluation. That’s because using “brute force” to build every possible feature is not valuable, since most features will be of little or no use. Again, an AI-based evaluation and scoring system can help the system determine which AI-discovered features are most likely beneficial to our model.

When discussing data science automation, most people immediately think of Automated Machine Learning – or AutoML. AutoML, however, does not address the entire spectrum of challenges in the data science process. Where traditional AutoMl does provide benefit is in the selection and optimization of Machine Learning Algorithms. With a feature table built, AutoML tools can help select ML algorithms, train and validate models and provide visual displays to compare results in a fraction of the time needed to perform the same task by hand. But the focus should not be limited to algorithm tuning and optimization, the traditional area of automation for data science. . Instead the goal should be to leverage automation to streamline the end-to-end data science process from data collection, preprocessing to building models accurately and efficiently. The final piece of the puzzle, often overlooked, is the model deployment and operationalization. Many customers use AWS orchestration software or have custom software for operationalizing ML models. Whether it is container based deployment at the edge, REST APIs or code generation, operationalizing ML models is the last frontier that determines if AutoML will deliver value.

As discussed earlier, the data science process has many elements, some of which are easier to automate than others. While we have not covered all the possible automation available to data scientists, we have discussed some of the most critical – automating data management and the creation and evaluation of features in preparation for ML selection. By focusing on automating feature engineering and data prep, data science teams can dramatically reduce their development times and develop AI/ML models in days – instead of months.