Is No-Code AI Really Worth The Effort?

- Thought Leadership

The idea of “no-code” software has become increasingly popular in a variety of fields. The world of AI and Machine Learning (ML) development is no different. Platforms that attempt to make the process of developing AI and ML models more intuitive, less “code-heavy,” and more ubiquitous are gaining in popularity. The challenge of developing AI and ML models is one that screams for no-code or low-code solutions. AI failure rates are notorious – whether it’s VentureBeat reporting 87% failure rates for data science projects in 2019 or Gartner reporting in 2021 that only 53% of AI projects make it into production – even in AI-experienced organizations. While there are many challenges to successfully moving from “experiments” to “ROI” in the world of AI and ML, one of the biggest obstacles is the sheer complexity of the development process.

In the world of AI and ML development, “No-Code” and “Low-Code” solutions have taken a course that, in many ways, was predictable. These solutions have focused on a two-pronged approach: Make building models more visual and automate the often time-consuming process of selecting the most appropriate Machine Learning Algorithm for a given model. While these systems have received a lot of attention for their ability to ease building models, they fundamentally do not solve one of the biggest challenges in the AI/ML process that is often associated with failure.

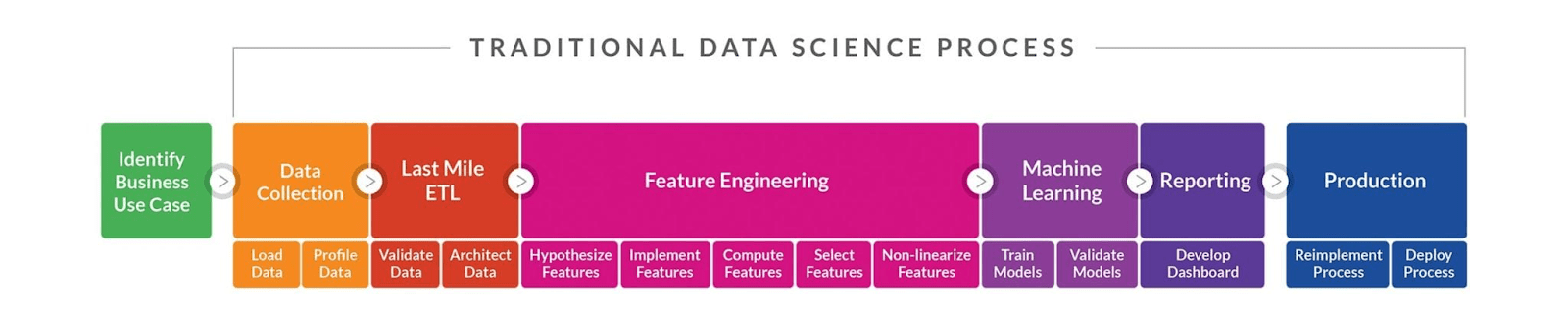

To understand the limitations of the majority of AI/ML “No-Code” platforms, we must also understand the challenges of AI development. While selecting and optimizing the most effective Machine Learning Algorithm is undoubtedly a critical step to automation, it’s not likely to yield the most significant savings in development time. The AI development process includes five distinct phases before a development team can put a model into production.

Of these five phases, the process commonly referred to as “feature engineering” is the most time-consuming and problematic. To understand the difficulty in automating feature engineering, we must delve into the complexity of data. For even the smallest business organization, data often resides in disparate systems, each with complex multi-table data storage architectures, states and complex relationships. Even a normalized data warehouse with the cleanest data and the best thought-out structure will provide enormous challenges during the feature engineering process.

The challenge of feature engineering is intrinsic to business data. “Features” essentially describe columns of data that might be useful in developing a machine learning model. For an AI team to build valuable features, traditional data science projects include many data scientists and subject matter experts – all tasked with identifying columns of data that might prove useful in the model-building process. Features are often chosen based on the best judgment of subject matter experts who can discern data columns that might provide valuable insights vs. columns that would be superfluous. This trial and error process is highly iterative and requires in-depth knowledge of available data sources. Automating the feature engineering process has traditionally been limited to two steps: deriving new columns of data from existing columns and using manual point-and-click and workflow style processes to make building feature tables simpler.

The first of these two methods is commonly adopted by what are known as “AutoML” platforms. In simple terms, AutoML platforms use mathematical calculations, often very sophisticated ones, to derive new columns by applying a statistical transformation to existing columns of data. While undoubtedly useful, these processes do not eliminate the need to build tables of features manually by identifying columns of data that might help create the core feature table needed by virtually all AutoML tools to perform their work. The second type of AI automation technique commonly used leverages visual workflow building tools to make the process of connecting to data sets and building feature tables less “code-heavy.”

Of course, the challenge is that removing code from the table building process and replacing it with visual workflows does not eliminate the need for SMEs and trial and error. The longest and most time-consuming part of the process is, in fact, the need for hypothesis, test, and experimentation in identifying, testing, and selecting columns of data that might be best suited for model development. Whether performed with code or with visual workflows, it’s still a slow and iterative process. To truly automate developing AI/ML models, we must find ways to eliminate the need for human experimentation and evaluation of features instead of finding ways to rely on automation.

AI-Powered feature engineering leverages AI to replicate the human process of identifying possible columns and testing multiple iterations, evaluating each combination of columns to find the “features” that are most likely to be helpful in any given AI model. Systems with AI-Powered feature engineering can automatically connect to enterprise data sources, evaluate columns for usefulness in given model development, and build useful features, exposing the most valuable ones automatically. By combining the automation delivered by AI-Powered feature engineering with traditional automation of ML Algorithms, platforms can provide end-to-end automation. Platforms with End-to-end automation can be leveraged by non-data scientists to build robust AI/ML models in a fraction of the time. dotData’s platforms, whether integrated into Python or self-sufficient, can deliver AI/ML models in days – vs. the months’ worth of effort needed through traditional means.

By combining traditional AutoML capabilities with AI-Powered feature engineering, AI Automation platforms help businesses of all sizes use end-to-end automation of AI development. Through such platforms, BI professionals and more traditional data experts can leverage their knowledge of BI platforms and data without learning the complicated and time-consuming data science practices necessary to build successful AI/ML models. Similarly, data scientists in larger organizations can accelerate their model development process by minimizing the amount of time needed to iterate features to find the optimal combination of data columns and ML algorithms that will yield the perfect model.